Project 3: Illuminate (Algo Answers)

You can find the handout for this project here. Please skim the handout before your Algo Section!

You may look through the questions below before your section, but we will work through each question together, so make sure to attend section and participate to get full credit!

Raytracing Pipeline

As a group, come up with four or more aspects of the fully fledged raytracing pipeline that were omitted in Intersect, but are required for Illuminate. Discuss how you can use your code from Intersect to implement these components.

There is not necessarily a correct answer! We are looking for a qualitative explanation of your approach. Share your ideas with the TA before moving on.

There isn't a correct answer.

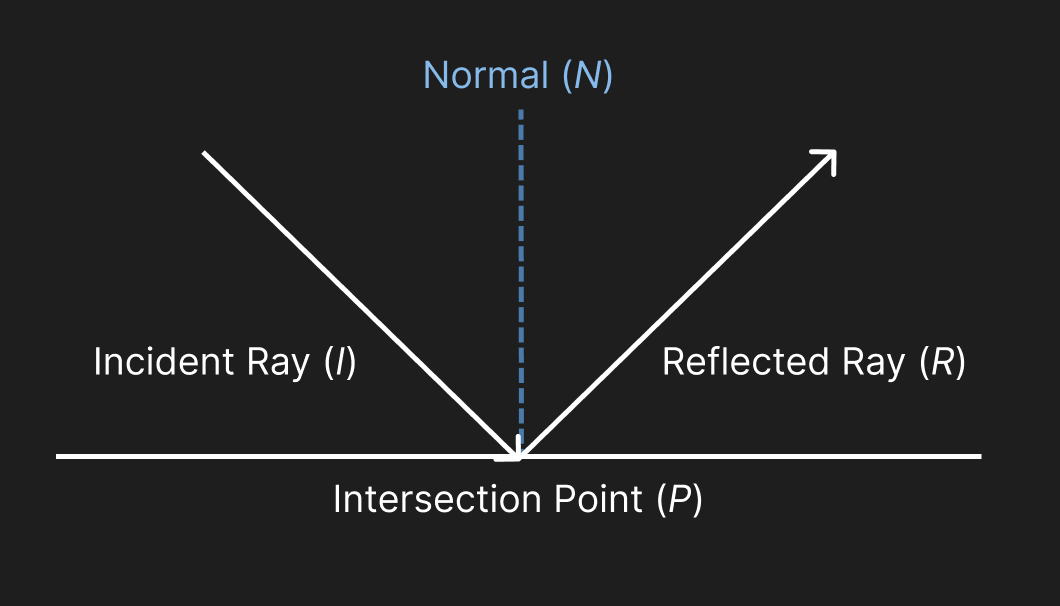

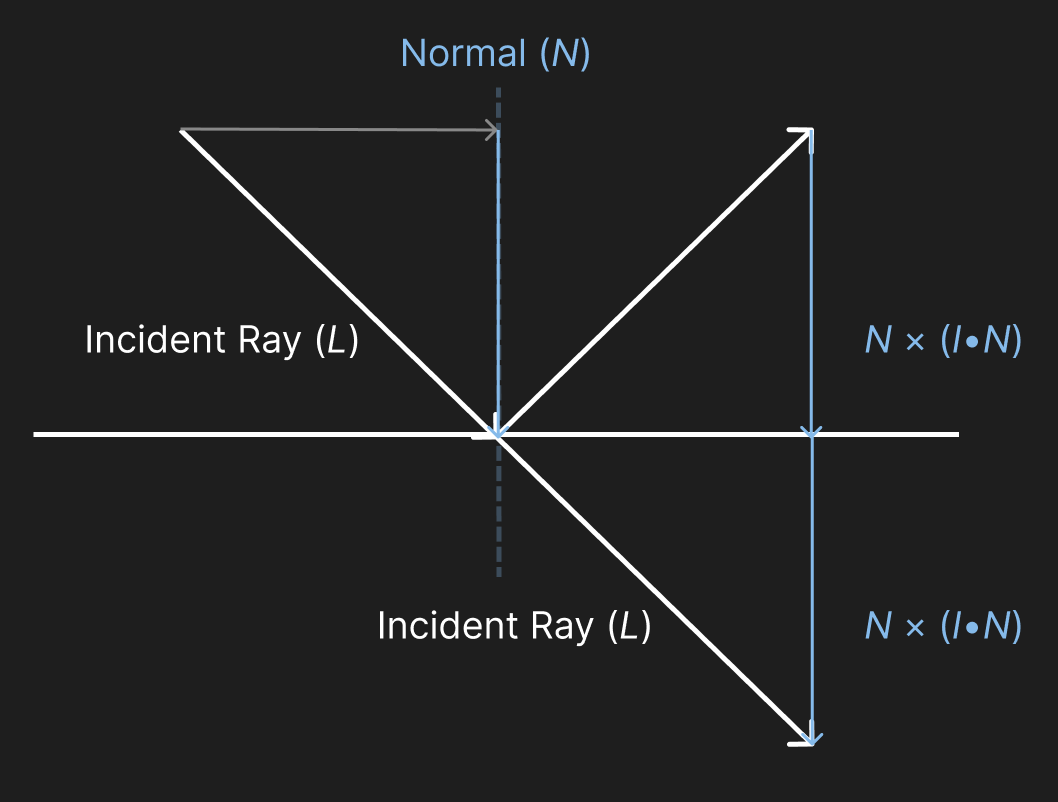

Reflection Equation

Given an incoming incident ray

Assuming both

If you get stuck, try drawing out a diagram on the whiteboard with your group! How might you break down

?

Answer:

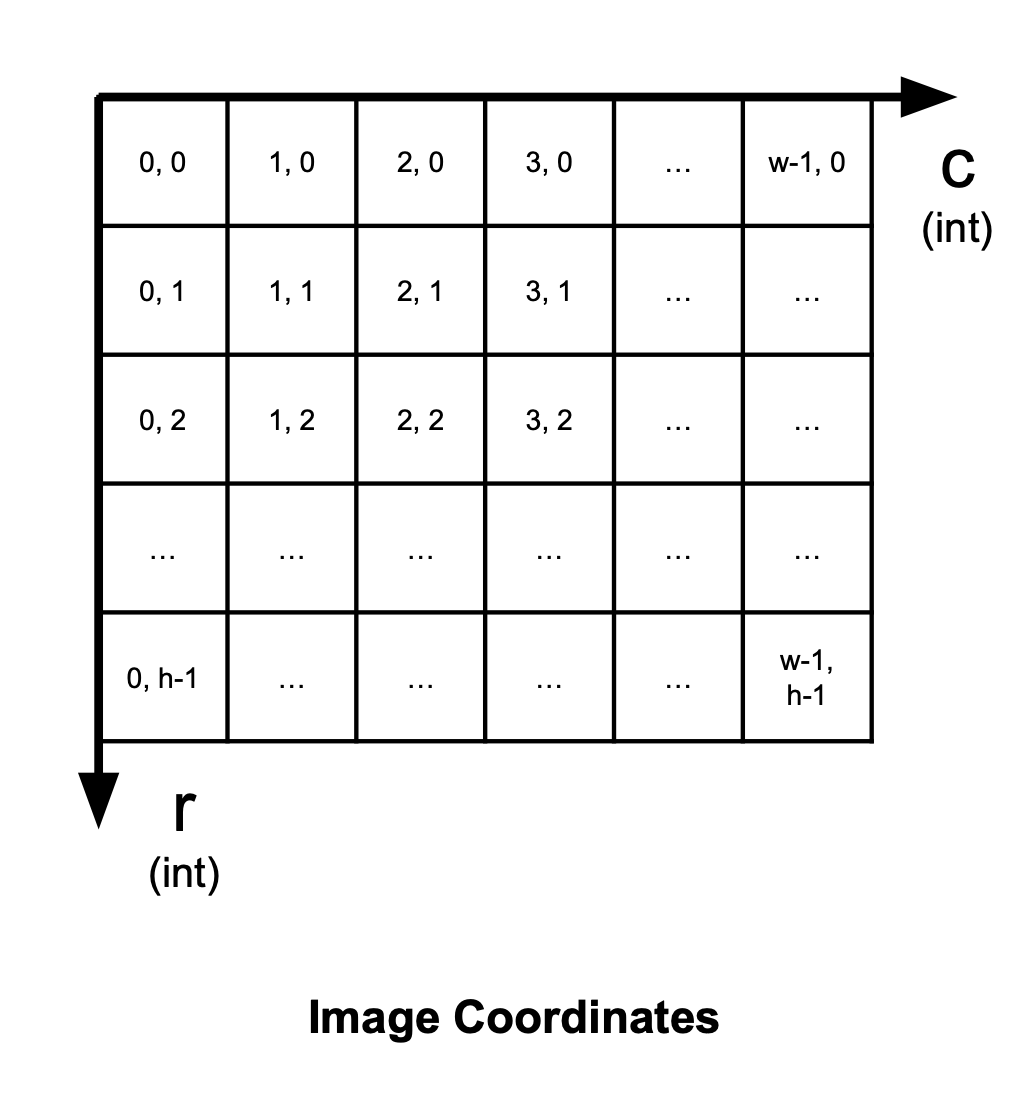

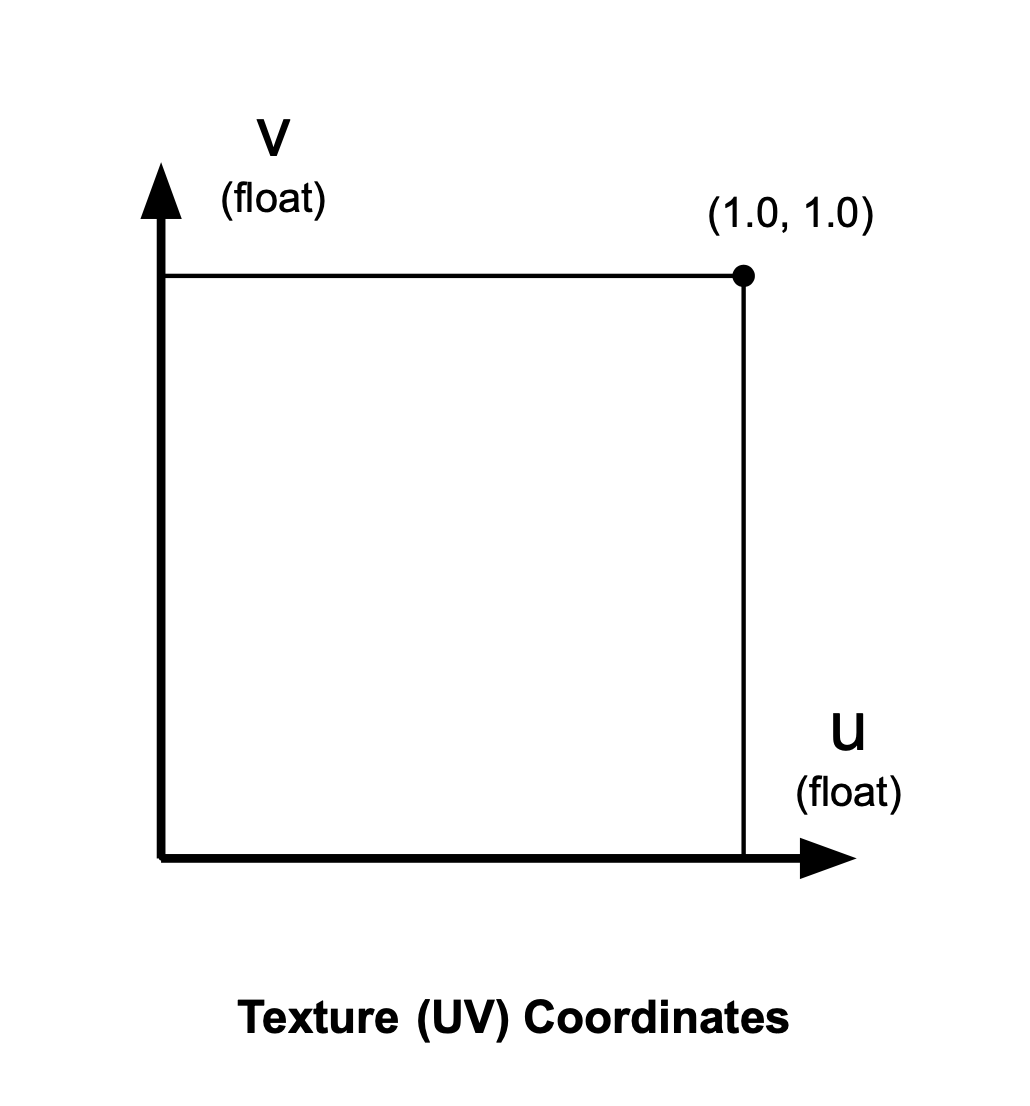
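For reference, here is a minimal sketch of the standard mirror-reflection formula, r = d - 2(d·n)n. The names d (incident direction), n (unit surface normal) and r (reflected direction) are our own assumption. It follows the hint above: split d into a component along n and a component tangent to the surface, keep the tangent part, and negate the normal part.

```python
def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def reflect(d, n):
    """Reflect incident direction d about the surface normal n.

    Assumes n is unit length. d splits into a part along n, (d . n) n,
    and a part tangent to the surface. Mirror reflection keeps the
    tangent part and negates the normal part: r = d - 2 (d . n) n.
    """
    k = 2.0 * dot(d, n)
    return tuple(di - k * ni for di, ni in zip(d, n))

# A ray travelling down and to the right hits a floor whose normal is +y.
print(reflect((1.0, -1.0, 0.0), (0.0, 1.0, 0.0)))  # (1.0, 1.0, 0.0)
```

Because only the normal component flips sign, the reflected vector has the same length as d, so a normalized incident direction yields a normalized reflected direction.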

Texture Coordinates

In Illuminate, you will be texture-mapping images onto your implicit shapes. One step of this process is taking a given intersection point, with known UV coordinates, and figuring out which pixel of the image we should use.

However, two different coordinate systems are in play here:

- Image coordinates have their origin at the top left of a width x height pixel grid. These are used for images like our Brush canvas, our Intersect output, and, most relevantly, images we use as textures.
- Texture (UV) coordinates have their origin at the bottom left of a unit square. These are used on the surfaces of our objects, as inputs to the equations you will write below.

Make sure you are comfortable with the diagram below, then work through the next two questions, where you will define a mapping from UV coordinates to image coordinates.

A Single Texture

Suppose we have an image of size

What is the pixel index

Answer:

Note that you'll have to check for cases where

Explanation:

We can think of this as a map from continuous UV coordinates that range in

- We can simply scale the UV coordinates by the image dimensions and floor them to obtain the pixel index.
- However, since UV coordinates use the bottom-left corner as their origin while images use the top-left corner, we have to "flip" the v coordinate before scaling and flooring.
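The scale-flip-floor steps above can be sketched as follows. This is a minimal illustration, not the reference answer: the function name and the (row, col) return order are hypothetical, and clamping u = 1 and v = 0 onto the last column/row is our assumption about how to handle the edge cases mentioned in the note above.

```python
import math

def uv_to_pixel(u, v, width, height):
    """Map UV coords in [0, 1] x [0, 1] (origin bottom-left) to a
    (row, col) pixel index in a width x height image (origin top-left)."""
    # Scale u across the image width and floor to get a column.
    col = math.floor(u * width)
    # Flip v so that v = 1 (top of the unit square) maps to row 0.
    row = math.floor((1.0 - v) * height)
    # u = 1 or v = 0 would land one past the last column/row; clamp them.
    col = min(col, width - 1)
    row = min(row, height - 1)
    return row, col

print(uv_to_pixel(0.0, 1.0, 4, 3))  # top-left pixel: (0, 0)
print(uv_to_pixel(1.0, 0.0, 4, 3))  # bottom-right pixel: (2, 3)
```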

Repeated Textures

Suppose that we'd now like to repeat the texture

Now, what is the pixel index

Answer:

Again, you'll have to check for cases where

Explanation:

We can think of this as a map from continuous UV coordinates that range in

- See the previous question's answer for an explanation.

- Note that it's necessary to take the floored indices modulo the image dimensions to get the actual pixel indices in the texture image.
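A minimal sketch of the repeated-texture mapping, assuming hypothetical repeat factors repeat_u and repeat_v (how many times the image tiles across the unit square in each direction). It scales into a virtual grid of tiled copies, floors as before, and wraps back into the single image with modulo. The clamp at u = 1 and v = 0 is again our own assumption about the edge cases.

```python
import math

def uv_to_pixel_repeated(u, v, width, height, repeat_u, repeat_v):
    """Map UV coords in [0, 1] x [0, 1] (origin bottom-left) to a
    (row, col) index in a width x height image tiled repeat_u times
    horizontally and repeat_v times vertically."""
    # Size of the virtual grid formed by all the tiled copies.
    grid_w = repeat_u * width
    grid_h = repeat_v * height
    # Scale and floor as for a single texture, flipping v first.
    col = math.floor(u * grid_w)
    row = math.floor((1.0 - v) * grid_h)
    # u = 1 or v = 0 lands one past the last cell of the grid; clamp it.
    col = min(col, math.ceil(grid_w) - 1)
    row = min(row, math.ceil(grid_h) - 1)
    # Wrap the virtual index back into the single texture image.
    return row % height, col % width

print(uv_to_pixel_repeated(0.75, 0.75, 4, 2, 2, 2))  # (1, 2)
```

With repeat_u = repeat_v = 1 this reduces to the single-texture mapping, since the modulo never changes an index that is already inside the image.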

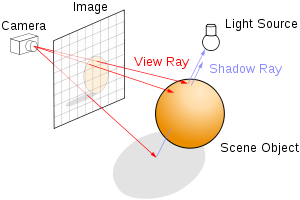

Shadow Generation

To test if some intersection point

Suppose point

Does your answer change if we are restricted to the primitives we use in this course? Discuss with your group.

Answer:

Conditionally, yes. If object

Note 1: although none of the primitives you're using happen to be concave, you are not obligated to optimize out the shadow ray's intersection test with the original object.

Note 2: you do not want to detect erroneous intersections with the exact point on object
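To make both notes concrete, here is a minimal shadow-ray sketch under loudly stated assumptions: the scene is only spheres given as (center, radius) pairs, the light is a single point light, and EPSILON is a hypothetical offset value. Nudging the ray origin along the normal is one common way to avoid re-hitting the starting point (Note 2); it is not necessarily the approach the handout expects. The loop also tests the original object, as Note 1 permits.

```python
import math

EPSILON = 1e-4  # hypothetical offset; pick one that suits your scene's scale

def add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def scale(a, s):
    return tuple(x * s for x in a)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def ray_sphere_t(origin, direction, center, radius):
    """Smallest t > 0 with origin + t * direction on the sphere, else None.

    Assumes direction is unit length, so the quadratic's leading term is 1.
    """
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # nearer root first
        if t > 0:
            return t
    return None

def in_shadow(point, normal, light_pos, spheres):
    """True if any sphere blocks the segment from point to a point light."""
    # Nudge the shadow ray's origin off the surface so floating-point error
    # does not make it re-intersect the very point it started from.
    origin = add(point, scale(normal, EPSILON))
    to_light = sub(light_pos, origin)
    dist = math.sqrt(dot(to_light, to_light))
    direction = scale(to_light, 1.0 / dist)
    # Test every object, including the one the point lies on.
    for center, radius in spheres:
        t = ray_sphere_t(origin, direction, center, radius)
        # Only hits between the point and the light cast a shadow.
        if t is not None and t < dist:
            return True
    return False
```

For example, the top of a unit sphere at the origin is lit by a light at (0, 5, 0), but adding a sphere of radius 0.5 at (0, 3, 0) puts it in shadow. The `t < dist` check matters: a blocker on the far side of the light should not darken the point. For a directional light, dist would effectively be infinite.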

Submission

Algo Sections are graded on attendance and participation, so make sure the TAs know you're there!