Project 6: Action! (Algo Answers)

You can find the handout for this project here. Please skim the handout before your Algo Section!

You may look through the questions below before your section, but we will work through each question together, so make sure to attend section and participate to get full credit!

Camera Rotation

How would you compute the matrix for a rotation of 90 degrees about the axis

Once you have an idea of what to do, we recommend working through the math as a group!

Answer:

Explanation:

Recall Rodrigues' formula for an angle
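Rodrigues' formula for a rotation by an angle θ about a unit axis u = (u_x, u_y, u_z) can be written as R = cos θ · I + (1 − cos θ) · u uᵀ + sin θ · [u]×. Here [u]× is the matrix that computes the cross product u × v.

The formula holds for any unit axis, so the minimal C++ sketch below takes the axis as a parameter. The rodrigues function and Mat3 alias are hypothetical names, not part of the project stencil. Plugging in θ = 90 degrees (π/2 radians) and the axis from the question gives the requested matrix.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// 3x3 matrix stored row-major: m[row][col].
using Mat3 = std::array<std::array<double, 3>, 3>;

// Hypothetical helper implementing Rodrigues' rotation formula:
//   R = cos(theta) * I + (1 - cos(theta)) * u u^T + sin(theta) * [u]x
// (ux, uy, uz) must be a unit vector; theta is in radians.
Mat3 rodrigues(double ux, double uy, double uz, double theta) {
    assert(std::fabs(ux * ux + uy * uy + uz * uz - 1.0) < 1e-9 && "axis must be unit length");
    const double c = std::cos(theta);
    const double s = std::sin(theta);
    const double t = 1.0 - c;  // weight of the outer product u u^T
    // Each entry = cos term (diagonal only) + outer-product term + cross-product term.
    return Mat3{{
        {c + ux * ux * t,      ux * uy * t - uz * s, ux * uz * t + uy * s},
        {uy * ux * t + uz * s, c + uy * uy * t,      uy * uz * t - ux * s},
        {uz * ux * t - uy * s, uz * uy * t + ux * s, c + uz * uz * t},
    }};
}
```

For example, rodrigues(0, 0, 1, π/2) returns the following rows, up to floating-point error:

- (0, −1, 0)
- (1, 0, 0)
- (0, 0, 1)

This is the familiar 90 degree counterclockwise rotation about the z-axis.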

Shader Uniforms

Given a texture object m_tex1 and a shader object m_shader, please write pseudocode that assigns m_tex1 to the uniform variable declared as uniform sampler2D texture1 in m_shader's source code. Assume nothing about the current OpenGL state.

Not every answer will be identical, so discuss your thought process as a group, and be ready to share!

Answer:

Optional for setting the uniform, but required for drawing:

set the active texture unit to GL_TEXTURE0 (glActiveTexture)

bind m_tex1 to the GL_TEXTURE_2D target (glBindTexture)

Required both for setting the uniform and for drawing:

make m_shader the active shader program (glUseProgram)

store the location of texture1 in m_shader in a variable (glGetUniformLocation)

set the integer uniform at that location to 0 (glUniform1i)

Explanation:

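If we assume nothing about the state and want to draw, we must do two things:

- bind the texture to some texture unit GL_TEXTUREn
- set the sampler2D uniform to the integer n

The sampler uniform stores the index of a texture unit, not the texture object itself. That is why we set it to 0 to match GL_TEXTURE0.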

Textures, Renderbuffers, Framebuffers Oh My!

Concept

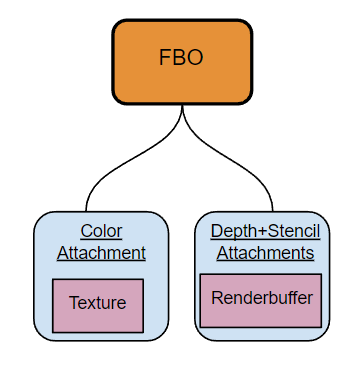

For a framebuffer with color and depth attachments, please draw a schematic/diagram of the framebuffer on the whiteboard. Make sure to indicate which attachments are textures and which are renderbuffers, and be prepared to explain to your TA. Assume the usage of the framebuffer will be to draw a scene with a grayscale filter applied.

Answer:

Explanation:

A texture was chosen for the color attachment because we must sample from it in order to apply our grayscale filter. A renderbuffer was chosen for the depth attachment because we never need to sample from it. Renderbuffers cannot be sampled, but they are optimized for use as render targets, so they are the more efficient choice for storing the depth attachment.
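The need to sample the color attachment comes from the grayscale pass: every output pixel reads back one texel of the color attachment rendered in the first pass. Below is a minimal CPU sketch of that per-texel work; on the GPU it would run in a fragment shader.

The following are assumptions for illustration:

- the RGB struct and the function names
- the Rec. 601 luma weights (0.299, 0.587, 0.114)

A plain average of the three channels is another common grayscale choice.

```cpp
#include <cassert>
#include <vector>

// One texel of the color attachment, channels normalized to [0, 1].
struct RGB {
    float r, g, b;
};

// Luminance with Rec. 601 luma weights (an assumption; other weightings exist).
float luminance(const RGB& c) {
    return 0.299f * c.r + 0.587f * c.g + 0.114f * c.b;
}

// Hypothetical grayscale pass: read each texel of the first pass's color
// attachment and write its luminance to all three output channels.
std::vector<RGB> grayscalePass(const std::vector<RGB>& colorAttachment) {
    std::vector<RGB> out;
    out.reserve(colorAttachment.size());
    for (const RGB& texel : colorAttachment) {
        const float y = luminance(texel);
        out.push_back(RGB{y, y, y});
    }
    assert(out.size() == colorAttachment.size());  // one output pixel per texel
    return out;
}
```

Nothing in this pass ever reads depth values, which matches the choice of a renderbuffer for the depth attachment.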

Functional Understanding

glTexImage2D

With your group, explain the difference between internal format and format in the call glTexImage2D.

Answer:

The internal format specifies how OpenGL should store the data in the newly created texture, such as which channels it has and how many bits each uses. The format, together with the type parameter, describes the layout of the input pixel data, if any, so that OpenGL can read it in properly.

When creating a texture for an FBO, we pass nullptr for the pixel data. The format therefore has no effect on the texture's contents, though it must still be a valid value. For a texture such as m_kitten_texture in the lab, however, we must specify it correctly in order to read in the QImage data properly.
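The same four input bytes mean different colors depending on the format you declare, which is why the format must match the input data. The sketch below assumes type GL_UNSIGNED_BYTE, for which each byte b is normalized to b / 255. It uses a hypothetical PixelFormat enum as a stand-in for the GL_RGBA and GL_BGRA enums; it is an illustration, not OpenGL's actual implementation.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Hypothetical stand-ins for the client-side layouts GL_RGBA and GL_BGRA.
enum class PixelFormat { RGBA, BGRA };

// Normalized color in R, G, B, A order, each channel in [0, 1].
using Color = std::array<float, 4>;

// Interprets one 4-byte input pixel according to the declared format, with
// GL_UNSIGNED_BYTE semantics: each byte b becomes b / 255.
Color unpackPixel(const std::uint8_t bytes[4], PixelFormat format) {
    auto norm = [](std::uint8_t b) { return b / 255.0f; };
    if (format == PixelFormat::RGBA) {
        return {norm(bytes[0]), norm(bytes[1]), norm(bytes[2]), norm(bytes[3])};
    }
    // BGRA: blue is stored first and red third, so swap them back into RGBA order.
    return {norm(bytes[2]), norm(bytes[1]), norm(bytes[0]), norm(bytes[3])};
}
```

For the bytes {255, 0, 0, 255}, declaring RGBA yields opaque red while declaring BGRA yields opaque blue. A mismatched format therefore silently swaps the red and blue channels of a loaded image. The internal format, by contrast, only controls how the resulting values are stored in the texture.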

glTexParameteri

Now, discuss the difference between using GL_NEAREST and GL_LINEAR when setting magnification and minification filters in glTexParameteri. Explain how these two methods might result in different output colors when sampling from an image.

Answer:

When sampling between texel centers, GL_NEAREST performs no blending. As the name implies, it picks the single texel nearest to the sample point and returns its color. GL_LINEAR, on the other hand, linearly interpolates between the surrounding texels (the four nearest texels for a 2D texture) to compute the output color. As a result, a magnified texture looks blocky and pixelated with GL_NEAREST but smooth (and somewhat blurry) with GL_LINEAR.
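The difference is easiest to see on a tiny image. Below is a minimal CPU sketch of both filters for a single-channel texture. It assumes clamp-to-edge wrapping and OpenGL's convention that texel centers lie at (i + 0.5) / width. The Image struct and sample functions are hypothetical; OpenGL performs this filtering for you on the GPU.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Single-channel image; texel (x, y) lives at data[y * width + x].
struct Image {
    int width;
    int height;
    std::vector<float> data;

    // Clamp-to-edge fetch: out-of-range coordinates reuse the border texel.
    float texel(int x, int y) const {
        assert(static_cast<int>(data.size()) == width * height);
        x = std::max(0, std::min(x, width - 1));
        y = std::max(0, std::min(y, height - 1));
        return data[y * width + x];
    }
};

// GL_NEAREST-style lookup: return the one texel whose area contains (u, v).
float sampleNearest(const Image& img, float u, float v) {
    const int x = static_cast<int>(std::floor(u * img.width));
    const int y = static_cast<int>(std::floor(v * img.height));
    return img.texel(x, y);
}

// GL_LINEAR-style lookup: blend the four texels whose centers surround (u, v).
float sampleLinear(const Image& img, float u, float v) {
    // Texel centers sit at (i + 0.5) / size, so shift by half a texel.
    const float fx = u * img.width - 0.5f;
    const float fy = v * img.height - 0.5f;
    const int x0 = static_cast<int>(std::floor(fx));
    const int y0 = static_cast<int>(std::floor(fy));
    const float a = fx - x0;  // weight of texel column x0 + 1
    const float b = fy - y0;  // weight of texel row y0 + 1
    const float top = (1.0f - a) * img.texel(x0, y0) + a * img.texel(x0 + 1, y0);
    const float bottom = (1.0f - a) * img.texel(x0, y0 + 1) + a * img.texel(x0 + 1, y0 + 1);
    return (1.0f - b) * top + b * bottom;
}
```

Take a two-texel image (black, white) and sample exactly halfway between the texel centers. GL_NEAREST returns one of the original values (white here), while GL_LINEAR returns 0.5 gray.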

Realtime vs. Ray

Recursive Reflections

Why do you think we are not implementing recursive reflections in this assignment? There are many correct answers here! Discuss with your group, and consider the limitations of OpenGL in comparison to our earlier assignments.

Answer:

Recursive reflections require access to the full scene data so that we can cast rays recursively. In OpenGL's rasterization pipeline, however, once we render a frame we only have a 2D image to reference, so true reflections are not possible without that scene data.

There are common techniques for imitating reflections, such as "screen-space reflections", but as the name implies, they can only reflect what is visible on screen. For example, suppose one sphere is on screen and another is just offscreen to the right. The offscreen sphere could not be reflected in the onscreen one, because its data was never rendered.

Differences

Apart from recursive reflections, what are the advantages to using offline rendering as opposed to realtime rendering? What are the advantages to using realtime rendering as opposed to offline? Talk through some advantages of each side and share them with your TA.

Explanation:

Ray tracing makes it much simpler to simulate physically based lighting effects such as soft shadows. Many computations are also easier to understand conceptually from a ray-tracing perspective. Examples include shadows (compared with shadow mapping) and the behavior of different BSDFs.

On the other hand, realtime rendering is currently far more performant and produces frames at interactive rates. The GPU also parallelizes the work across vertices and fragments for us, without any extra effort on our part!

Submission

Algo Sections are graded on attendance and participation, so make sure the TAs know you're there!