Project 5: Lights, Camera

When cloning the stencil code for this assignment, be sure to clone submodules. You can do this by running

git clone --recurse-submodules <repo-url>

Or, if you have already cloned the repo, you can run

git submodule update --init --recursive

You can find the section handout for this project here.

Please read this project handout before going to algo section!

Introduction

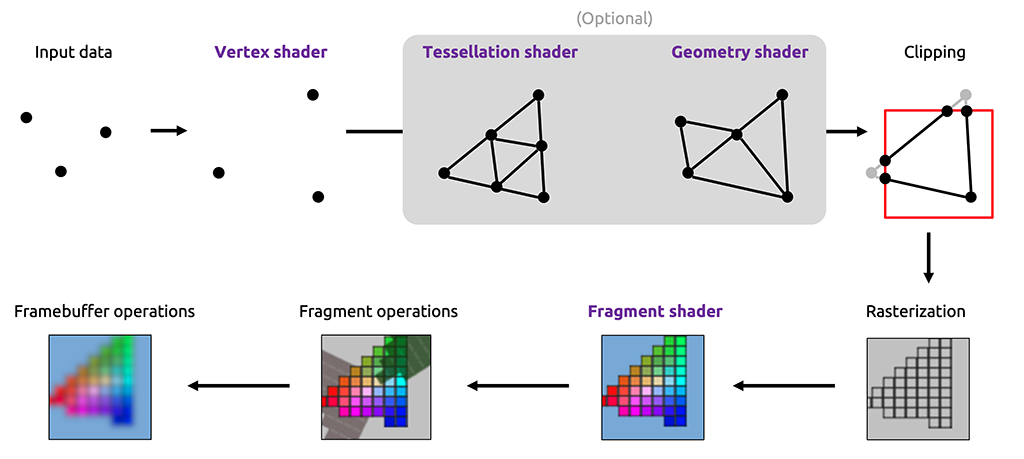

In the Ray assignments, you implemented a ray tracer that projects a 3-dimensional scene onto a 2-dimensional plane. Ray tracing, as you have probably experienced, can be very slow.

In this assignment, you will design a real-time scene viewer using components from previous labs including Parsing, Transformations, Trimeshes, VAOs, and Shaders.

Requirements

Parsing the scene

As in Ray, you will use your scene parser from Lab 5: Parsing to read in scenefiles. You are expected to call your scene parser to get your metadata and set up the scene as you see fit whenever a new scene is loaded. Note that you will no longer be using .ini files; you will use .json files directly!

Refer to section 3.1 for more information on how to work with the parser and deal with scene changes in the codebase.

Shape tessellation

In Lab 8: Trimeshes, you should have implemented tessellation for two shapes: cube and sphere. In this project, you are expected to also include tessellation for cone and cylinder. The descriptions of these shapes remain the same as in Project 3: Intersect:

- Cube: A unit cube (i.e. sides of length 1) centered at the origin.

- Sphere: A sphere centered at the origin with radius 0.5.

- Cone: A cone centered at the origin with height 1 whose bottom cap has radius 0.5.

- Cylinder: A cylinder centered at the origin with height 1 whose top and bottom caps have radius 0.5.

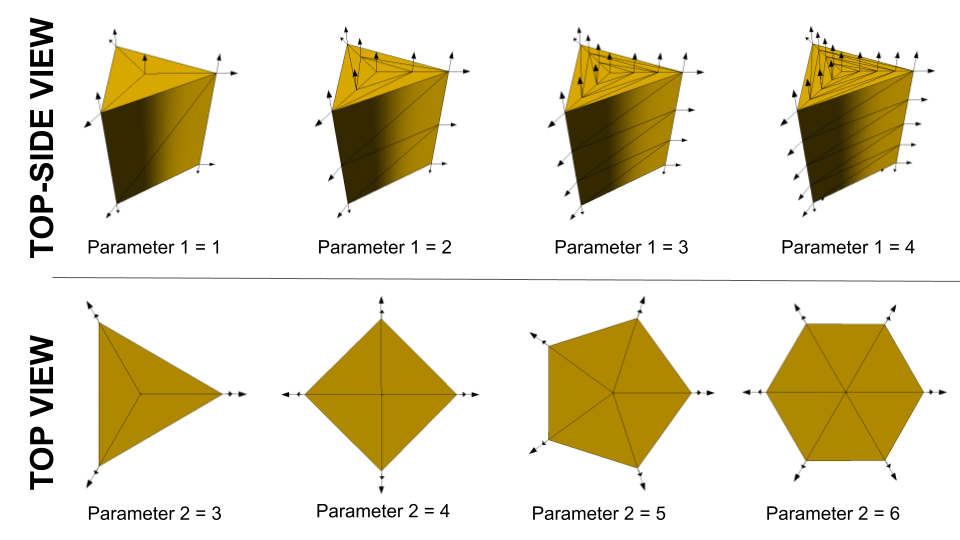

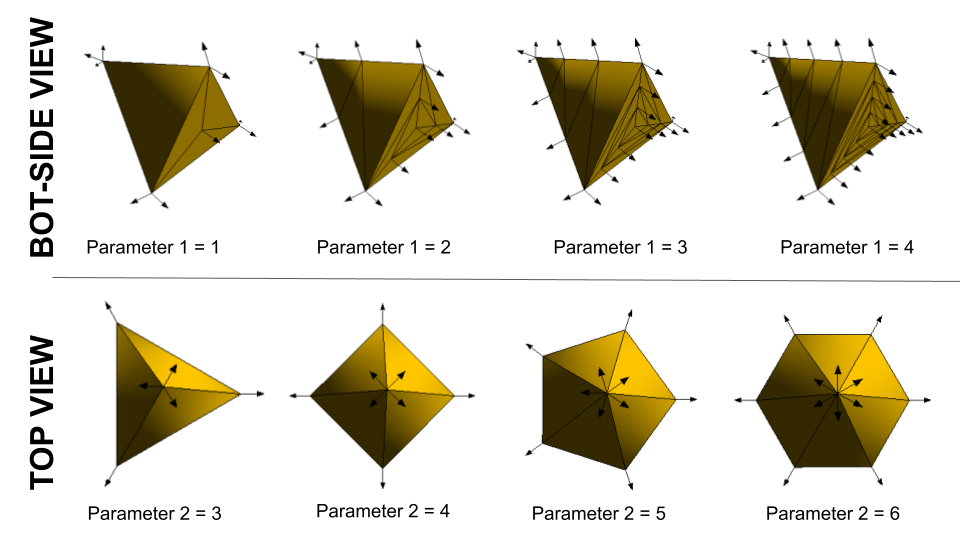

The tessellation parameters should control the vertical and radial tessellations just as they do for the sphere. As a reminder, parameter 1 controls tessellation along the latitude direction while parameter 2 controls tessellation along the longitude direction.

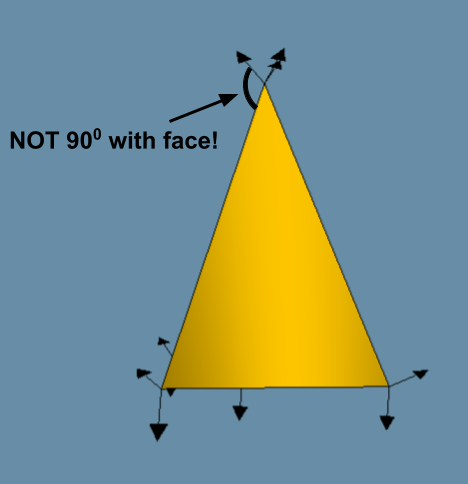

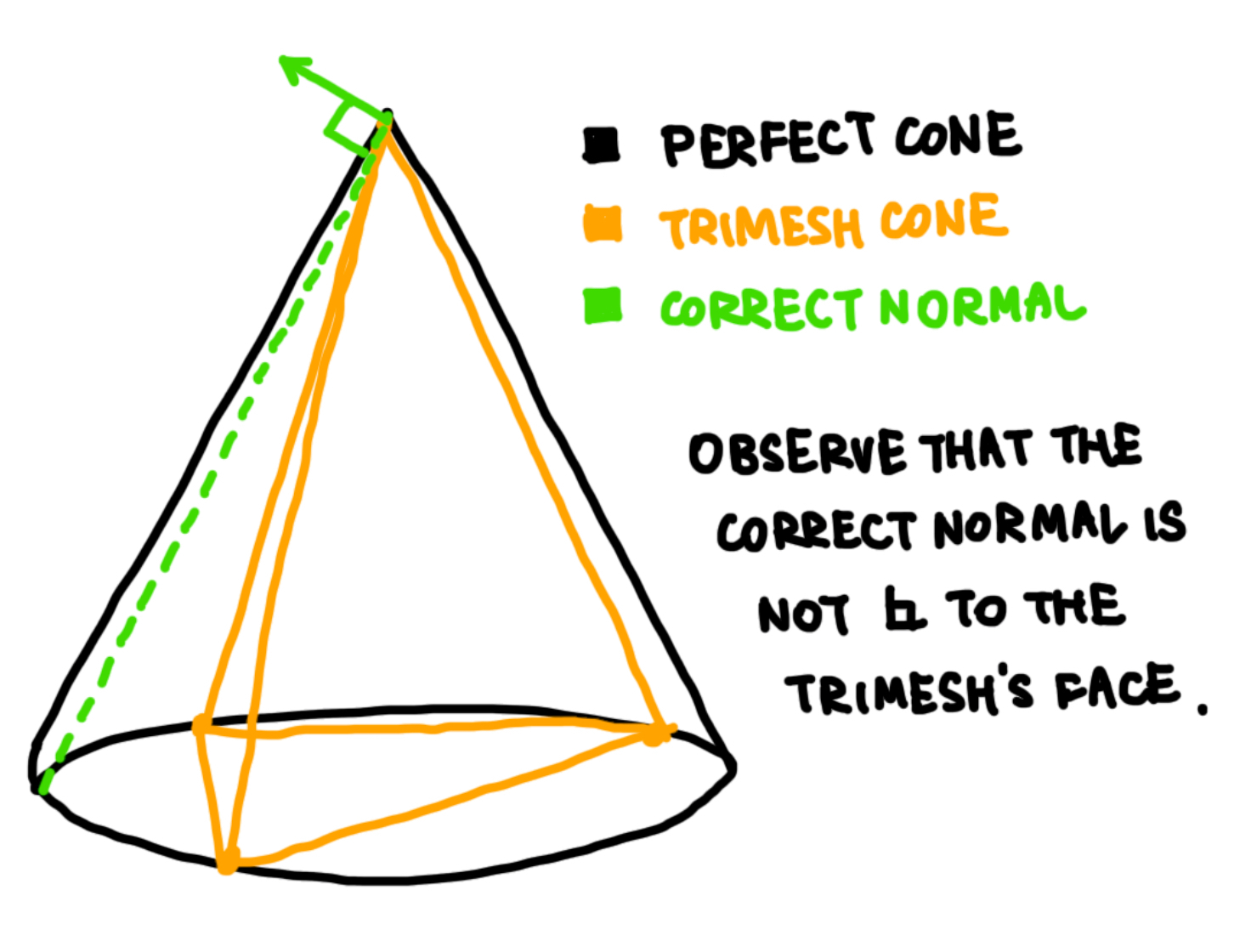

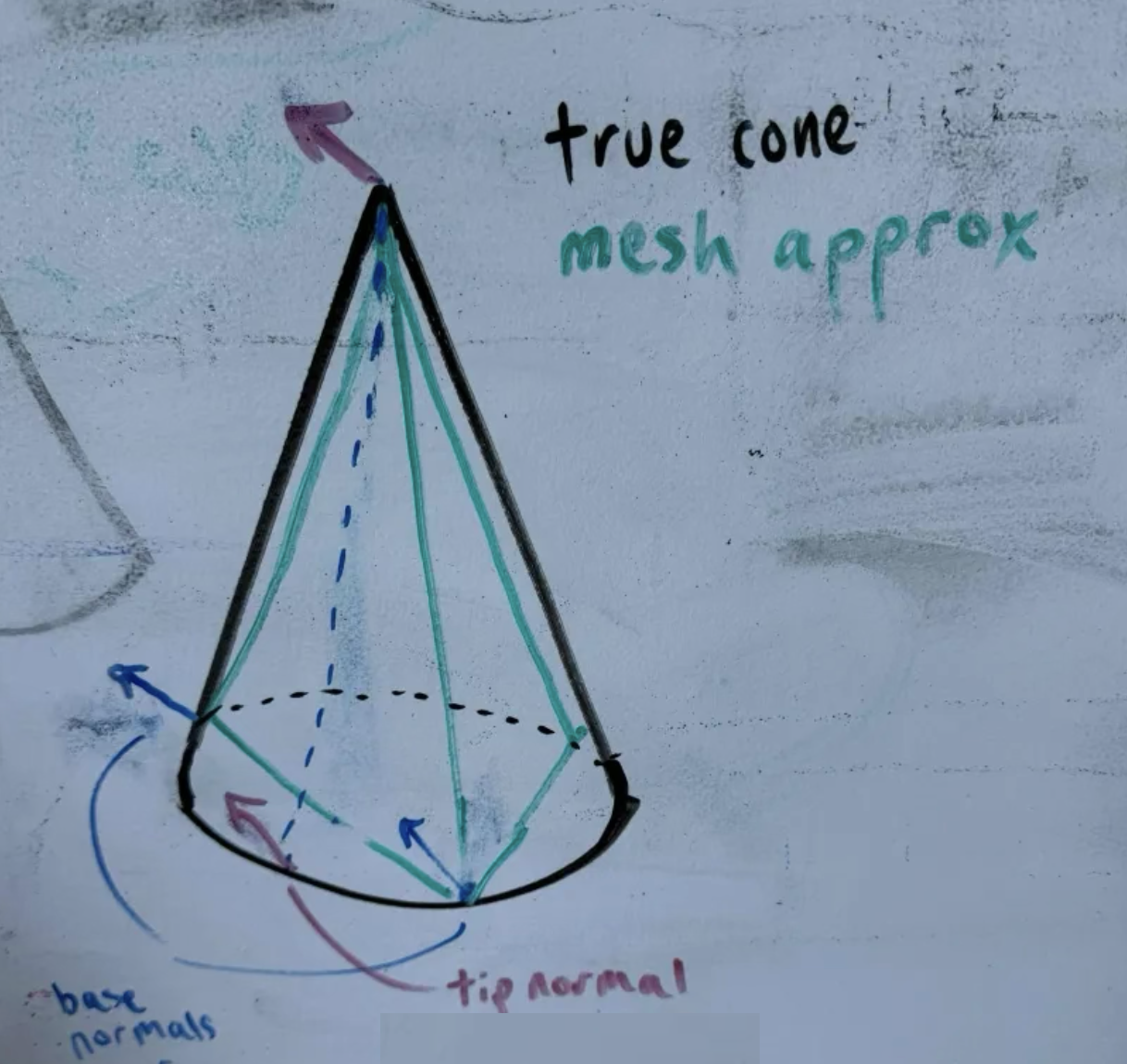

Be especially careful when calculating normals for the tip of the cone: each tip normal should be in line with the normal of the implicit cone along that side of the cone. It should NOT be a face normal, which would result in flat shading, and it should NOT point straight up, which may be how your ray tracer handled this edge case.

Correct Cone Tip Normal Diagram

Hint about cone tip normal calculation

One valid approach to calculating a normal at the cone tip is to average the two normals at the base vertices of the triangle (remember to normalize the result afterwards!).

More detailed explanation:

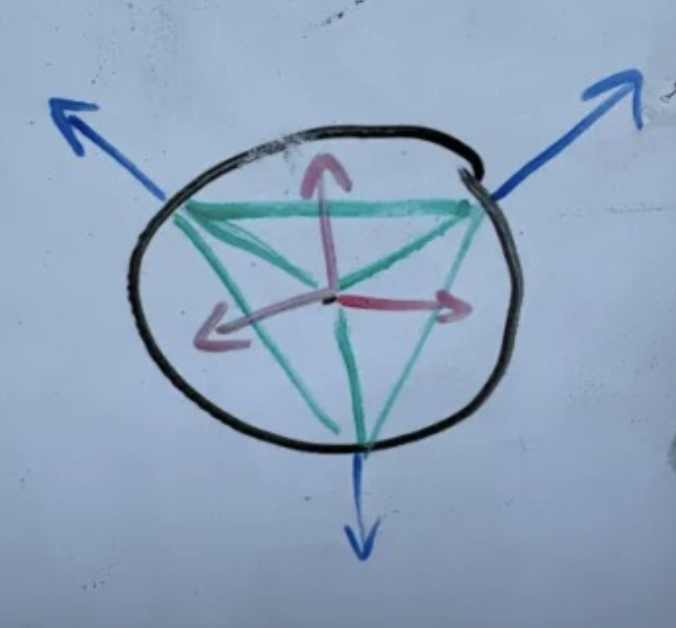

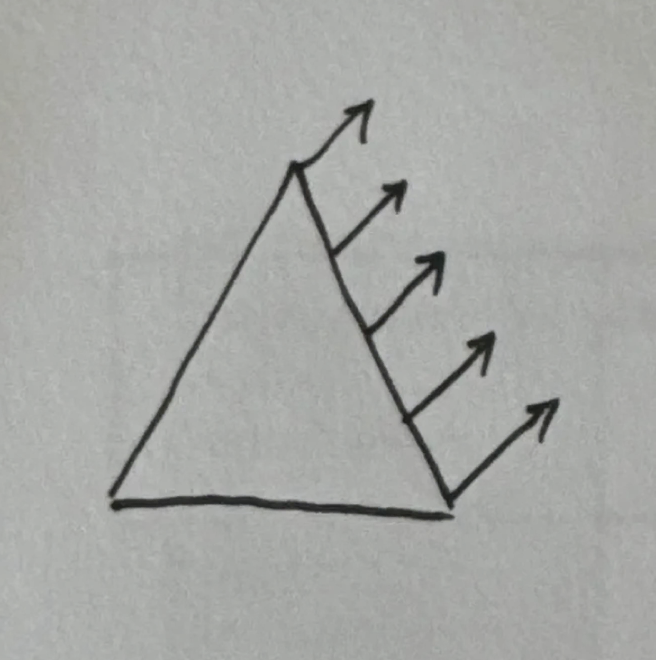

Figure 4.6 shows that the cone tip normal lies halfway between the two face normals at the top of the corresponding mesh face. If we were to take a vertical slice of the cone, we would see that the normals all point in the same direction along the surface, going up that slice:

This means that the two base normals of that face are the same as the two normals at the top of the face, so the cone tip normal can be found by taking the average of the base normals.
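The averaging hint can be sketched in code. This is a minimal sketch, not the required implementation: it assumes the cone's axis runs along y, uses a small stand-in Vec3 type instead of glm so that it compiles on its own, and the names coneSideNormal and coneTipNormal are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Minimal stand-in for glm::vec3 so the sketch compiles on its own.
struct Vec3 { float x, y, z; };

Vec3 normalize(Vec3 v) {
    float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    assert(len > 0.0f);
    return {v.x / len, v.y / len, v.z / len};
}

// Normal of the implicit cone (height 1, base radius 0.5, axis assumed
// along y) on the slanted side at angle theta around the axis.
// It does not depend on height, which is why base and top normals match.
Vec3 coneSideNormal(float theta) {
    return normalize({2.0f * std::cos(theta), 1.0f, 2.0f * std::sin(theta)});
}

// Tip normal for the mesh face whose base vertices sit at thetaLeft and
// thetaRight: the normalized average of the two base normals.
Vec3 coneTipNormal(float thetaLeft, float thetaRight) {
    Vec3 a = coneSideNormal(thetaLeft);
    Vec3 b = coneSideNormal(thetaRight);
    return normalize({a.x + b.x, a.y + b.y, a.z + b.z});
}
```

Each face gets its own tip normal, so the tip vertex is duplicated once per face rather than shared with a single straight-up normal.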

We recommend working on these shapes in your lab 8 stencil prior to porting them into Project 5: Lights, Camera.

This will allow you to use the visualizer to debug position and normals.

While the specifics of your tessellation code are up to you, you are expected to design your program in an extensible, object-oriented way. This means minimal code duplication and no 400-line branch structures (such as if... else... statements). You will lose points if you do not follow these guidelines.

Your shapes should never disappear when the tessellation parameters are too low! Be sure to set minimum tessellation parameters appropriate to each shape accordingly.
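One way to enforce per-shape minimums is to clamp the slider values before tessellating. The sketch below is only an illustration: the ShapeKind enum, the TessellationParams struct, and the specific minimum values are assumptions, so pick the minimums that actually keep your own shapes visible.

```cpp
#include <algorithm>
#include <cassert>

enum class ShapeKind { Cube, Sphere, Cone, Cylinder };  // hypothetical enum

struct TessellationParams { int param1; int param2; };

// Clamps the slider values to assumed per-shape minimums so that a shape
// never collapses to zero triangles.
TessellationParams clampTessellation(ShapeKind kind, int param1, int param2) {
    int min1 = 1;
    int min2 = 1;
    switch (kind) {
        case ShapeKind::Cube:
            break;  // a cube face is still a quad with one subdivision
        case ShapeKind::Sphere:
            min1 = 2;  // assumed: fewer than 2 latitude bands is degenerate
            min2 = 3;  // assumed: fewer than 3 wedges has no volume
            break;
        case ShapeKind::Cone:
        case ShapeKind::Cylinder:
            min2 = 3;  // assumed: fewer than 3 wedges has no volume
            break;
    }
    TessellationParams result{std::max(param1, min1), std::max(param2, min2)};
    assert(result.param1 >= 1 && result.param2 >= 1);
    return result;
}
```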

Camera

Your camera for the raytracer only needed to produce a view matrix. For Project 5: Lights, Camera, you must produce both view and projection matrices given the scene file's camera parameters. The projection matrix is needed to convert from camera space to clip space for OpenGL to render the scene correctly.

To implement your projection matrix, you may not use glm::perspective.

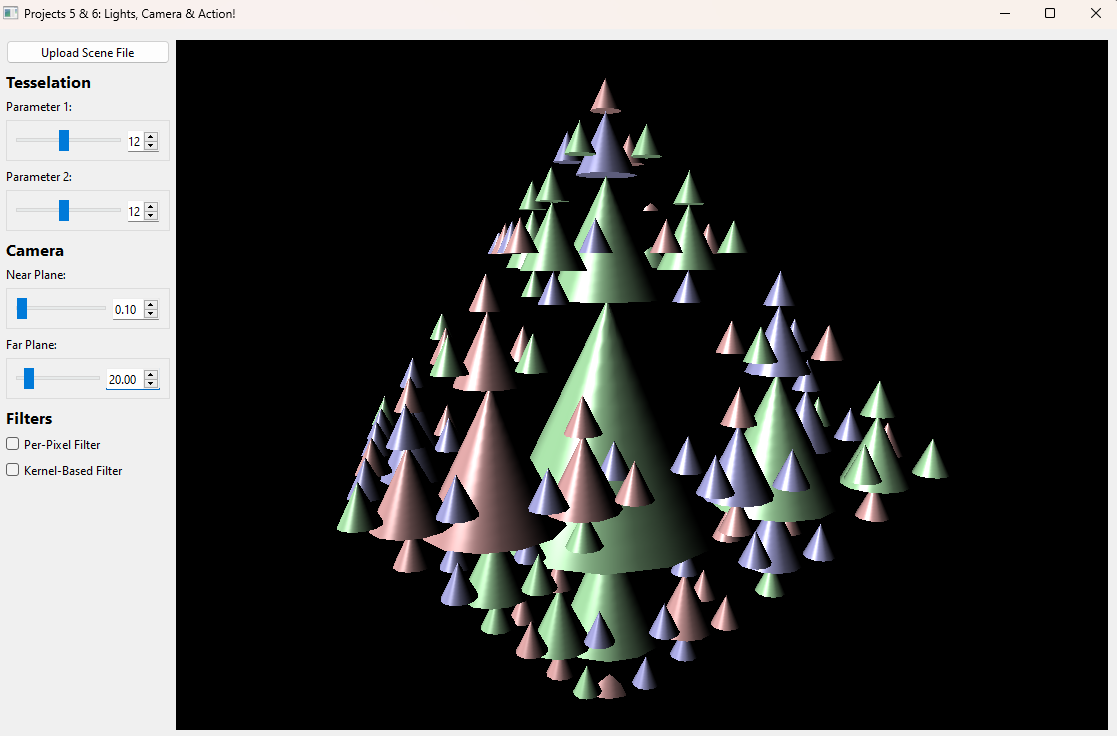

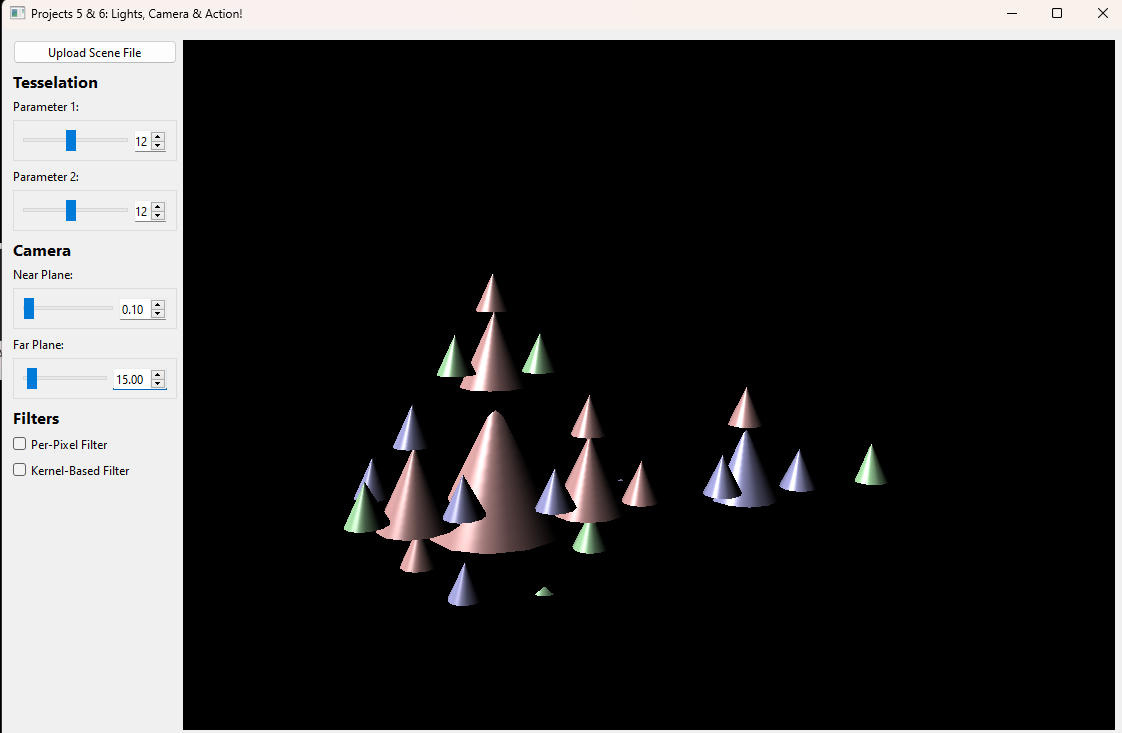

Keep in mind that you can edit the near and far plane distances in real time through the parameters settings.nearPlane and settings.farPlane. Be sure to update your camera accordingly when these parameters change!
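For reference while debugging, here is a sketch of the textbook OpenGL-style perspective matrix written without glm::perspective. It may be organized differently from the decomposition presented in lecture, so treat it as a cross-check rather than the expected derivation. The Mat4 alias, the function names, and the heightAngle and aspect parameters are assumptions made for illustration.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// Column-major 4x4 matrix indexed as m[col][row], like glm::mat4.
using Mat4 = std::array<std::array<float, 4>, 4>;

// heightAngle is the vertical field of view in radians and
// aspect is width / height (both names are assumptions).
Mat4 perspectiveSketch(float heightAngle, float aspect,
                       float nearPlane, float farPlane) {
    assert(nearPlane > 0.0f && farPlane > nearPlane && aspect > 0.0f);
    float t = std::tan(heightAngle / 2.0f);
    Mat4 m{};  // all zeros
    m[0][0] = 1.0f / (aspect * t);
    m[1][1] = 1.0f / t;
    m[2][2] = -(farPlane + nearPlane) / (farPlane - nearPlane);
    m[2][3] = -1.0f;  // copies -z into w for the perspective divide
    m[3][2] = -2.0f * farPlane * nearPlane / (farPlane - nearPlane);
    return m;
}

// NDC depth of a camera-space point on the view axis at depth z
// (z is negative in front of the camera).
float ndcDepth(const Mat4& m, float z) {
    float clipZ = m[2][2] * z + m[3][2];
    float clipW = m[2][3] * z + m[3][3];
    return clipZ / clipW;
}
```

A quick sanity check is that a point on the near plane lands at NDC depth -1 and a point on the far plane lands at +1. Re-running the construction whenever settings.nearPlane or settings.farPlane changes keeps the camera in sync with the sliders.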

You will be expanding on your camera's functionality in the next project, so be sure to keep this in mind when implementing your camera.

Data Handling

Welcome to the meat and potatoes of this project!

You will use everything you have learned from lecture and labs to use the OpenGL pipeline to manipulate and keep track of scene data. You will take your parsed scene metadata and use it to construct all necessary VAO/VBO objects. Then you will use these in the main render loop of paintGL to finally render the scene, while integrating materials, global data, and light data as uniforms.

As far as the design goes, some questions you might want to ask are:

- How will I represent my shape data in OpenGL?

- What do my VAOs and VBOs need to be able to do/How can I generalize what I did in lab 9?

- How will I use my parsed RenderData to draw my scene in paintGL?

- How many VBO/VAOs will I need in each scene? Is it dependent on the scene?

- Please do not include more VBOs and VAOs than necessary! For example, two separate VBOs should not store the same data. Points will be deducted for excess memory usage.

In general, filling realtime.cpp with all of your gl_____ calls is likely bad code design and will make debugging MUCH MORE DIFFICULT!
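One possible answer to the design questions above is to keep a single mesh per primitive type and let every object of that type reuse it with its own model matrix. The sketch below shows only the bookkeeping: SharedMesh and MeshCache are hypothetical names, and the id counter is a stand-in for real glGenVertexArrays / glGenBuffers calls so that the example compiles without an OpenGL context.

```cpp
#include <cassert>
#include <cstddef>
#include <map>

enum class PrimitiveKind { Cube, Sphere, Cone, Cylinder };  // hypothetical

// Hypothetical record for one tessellated shape type.
struct SharedMesh {
    unsigned int vao = 0;
    unsigned int vbo = 0;
    std::size_t vertexCount = 0;
};

// Hypothetical cache that creates each mesh at most once.
class MeshCache {
public:
    // Returns the mesh for `kind`, creating it on first request.
    SharedMesh& get(PrimitiveKind kind) {
        auto it = m_meshes.find(kind);
        if (it == m_meshes.end()) {
            SharedMesh mesh;
            mesh.vao = mesh.vbo = ++m_nextId;  // stand-in for glGen* calls
            it = m_meshes.emplace(kind, mesh).first;
        }
        assert(it->second.vbo != 0);  // every cached mesh owns a buffer id
        return it->second;
    }

    std::size_t size() const { return m_meshes.size(); }

private:
    std::map<PrimitiveKind, SharedMesh> m_meshes;
    unsigned int m_nextId = 0;
};
```

With this layout, a scene containing fifty cubes and one sphere still needs only two VBO/VAO pairs, and classes like this keep the gl_____ calls out of realtime.cpp.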

Shaders

For this project, your shader program should have the following features:

- Support for directional lights

- Ambient, diffuse, and specular intensity computation

- Final color computation integrating both object and light color

- Support for up to 8 simultaneous lights. (See subsection below)

Of course, your shaders must also perform all the necessary coordinate space conversions (think MVP matrices) as well as the illumination model computation. Think about how all of your scene data will integrate with your shaders! Which parts can you do in the Fragment shader, which parts can you do in the Vertex shader?

As such, it is important to have completed Lab 10: Shaders before attempting this part of the project.

Arrays and Structs in GLSL

In Lab 10: Shaders, you learned about various uniforms to use CPU data in a shader. When dealing with multiple identical objects, the common approach is to immediately think about arrays. In GLSL, an array of vec3s looks like this:

uniform vec3 myVectors[8];

Notice how this array is of fixed size 8, specified explicitly in code. This is because GLSL does not support dynamically sized arrays! This is also why we require you to support an explicit number of lights.

You can access element i in this array as follows:

vec3 myithElement = myVectors[i];

The next question you may have is how to actually pass data into a uniform array. For example, to set element j of the array to the vector (x, y, z), you would write the following (note the .c_str(), since glGetUniformLocation expects a C string):

std::string name = "myVectors[" + std::to_string(j) + "]";

GLint loc = glGetUniformLocation(shaderHandle, name.c_str());

glUniform3f(loc, x, y, z);

If you wish to get fancy, you can try using structs as well. They have to be first defined in the shader then declared as a uniform in the following manner:

struct AwesomeStruct

{

int favoriteNumber;

vec3 favoriteColor;

};

uniform AwesomeStruct myStruct;

Accessing the member favoriteColor from the uniform is done as such:

vec3 coolestColor = myStruct.favoriteColor;

To set the color data to a vector (r, g, b), you would write the following:

GLint loc = glGetUniformLocation(shaderHandle, "myStruct.favoriteColor");

glUniform3f(loc, r, g, b);
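Arrays and structs can also be combined. For an array of structs, each member is addressed by a name of the form array[i].member. The helper below is a small sketch for building those names; uniformElementName is a hypothetical function, and myStructs in the usage note assumes a declaration such as uniform AwesomeStruct myStructs[8]; in your shader.

```cpp
#include <cassert>
#include <string>

// Builds the uniform name for one element of a uniform array and,
// when `member` is given, for one member of that struct element.
std::string uniformElementName(const std::string& arrayName, int index,
                               const std::string& member = "") {
    assert(index >= 0);
    std::string name = arrayName + "[" + std::to_string(index) + "]";
    if (!member.empty()) {
        name += "." + member;
    }
    return name;
}
```

You would then pass uniformElementName("myStructs", 2, "favoriteColor").c_str() to glGetUniformLocation in the same way as in the examples above.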

If you wish to read more about uniforms in GLSL, check out this link!

Special tip about GLSL

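In GLSL, the result of pow(x, y) is undefined when x < 0, or when x = 0 and y <= 0. In your specular term, this can happen when the dot product is 0 and shininess = 0! Check the official documentation to learn more.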
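One way to stay inside the defined range is to guard the inputs before calling pow. The sketch below is written in C++ to mirror logic you would place in your fragment shader; specularFactor is a hypothetical name, and returning 0 when the base is 0 is a design choice rather than a requirement.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Computes (R . V)^shininess while avoiding the inputs for which
// GLSL's pow is undefined (negative base, or zero base with exponent <= 0).
float specularFactor(float rDotV, float shininess) {
    assert(shininess >= 0.0f);
    float base = std::max(rDotV, 0.0f);  // never pass a negative base
    if (base == 0.0f) {
        return 0.0f;  // design choice: no highlight, and pow is skipped
    }
    return std::pow(base, shininess);
}
```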

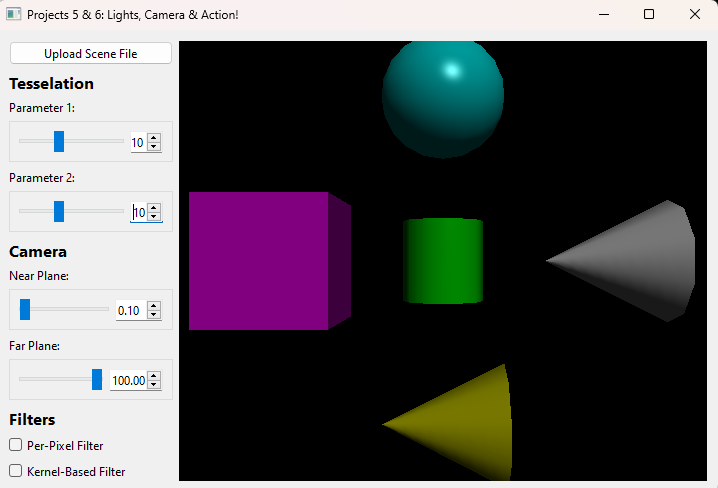

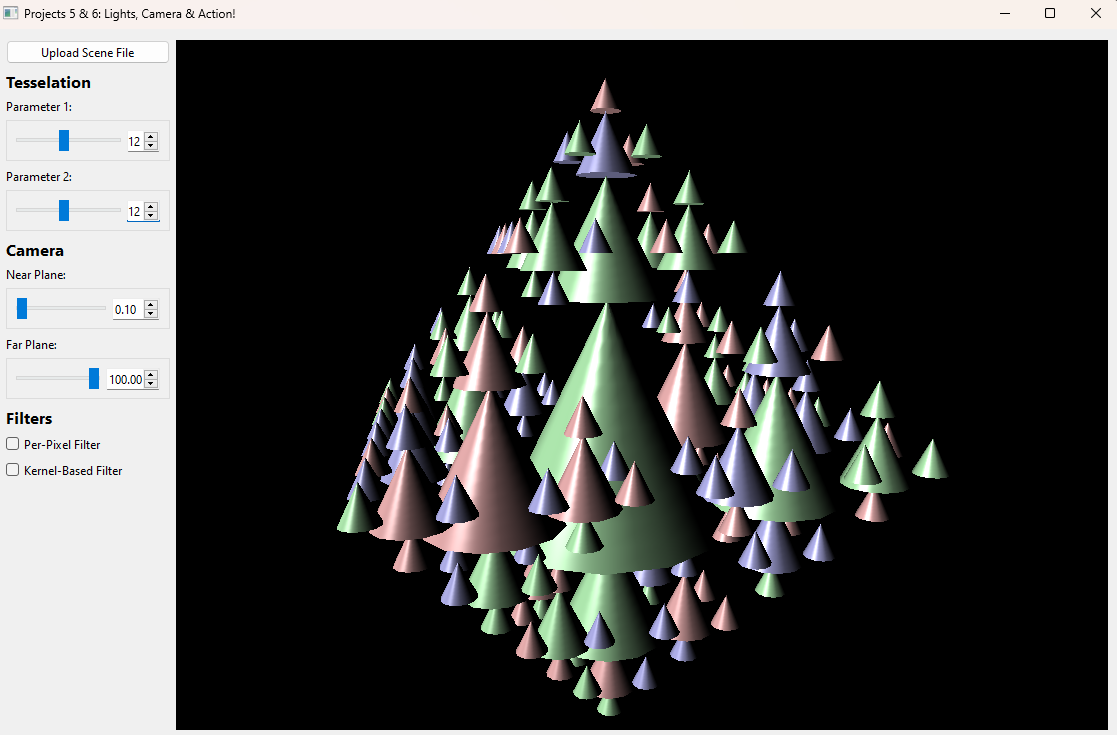

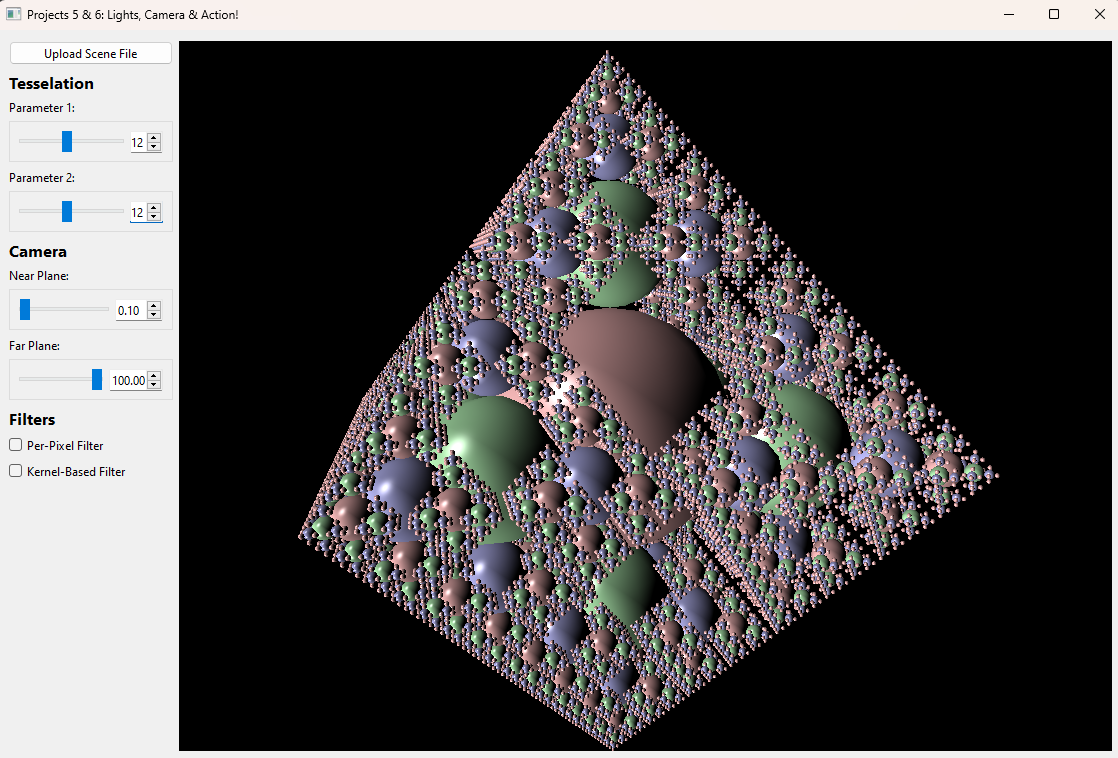

Results

Here are some sample images of what your realtime renderer should be capable of by the end of this assignment.

Stencil Code

You may notice the stencil code provided is minimal--that is by design. To complete this assignment, you will need to have a good understanding of the OpenGL pipeline.

We have provided for you the following files which you will interact with:

- Realtime: A file containing the initialization of an OpenGL context as well as functions that are automatically called on certain events. These include: initializeGL, paintGL, and resizeGL.

- Settings: initialized from an .xml file along with any GUI sliders/checkboxes which are updated in real time.

And you have already written the following:

Working with GLEW and OpenGL in Qt:

If you are working in a file and need to use OpenGL functions, make sure to use #include <GL/glew.h>!

Also, Qt makes the OpenGL context current for you when calls stem from any of: Realtime::initializeGL(), Realtime::paintGL(), and Realtime::resizeGL(). If you wish to make OpenGL calls stemming from outside these functions (for example Realtime::sceneChanged()), you must call makeCurrent() first.

Loading Scenes

As stated before, you will need to handle the loading of scenes using your scene parser from lab 5.

We have provided for you a helper function in realtime.cpp for you to use for this purpose titled sceneChanged().

This function will be called whenever the "Upload Scene File" button is pressed and a .json file is selected.

To get access to the current scenefile, you can use the settings object's sceneFilePath parameter.

Important: We will not be working with .ini files in this project! Given the real-time nature of this project, settings and parameters will be controlled by interactive UI buttons and sliders instead.

Realtime::initializeGL(), Realtime::paintGL(), & Realtime::resizeGL()

These functions are the "core" of a rendering system. If you are interested, they are overridden from the parent QOpenGLWidget class.

- initializeGL() is called once near the start of the program, after the constructor of Realtime has been called. It is also called before the first calls to paintGL() or resizeGL(). However, you cannot use any draw calls in this function. Rather, use it to initialize any OpenGL-related information you may need, after the GLEW initialization calls as commented. Note that you cannot use any OpenGL-related functions in the constructor of this class, as they are only available once GLEW has been initialized.

- paintGL() is called whenever the widget needs to be repainted, for example after update() is called. You won't have to worry about this for this project, but keep this behavior in mind when you add interactivity in Project 6: Action!.

- resizeGL() is called whenever the window is resized. You will need to use the input width and height to correctly update your camera.

Realtime::finish()

In OpenGL, we often use calls of the form glGen______. Just as with using the keyword new, we must delete this generated memory as well.

This function, finish(), will be called just before the program exits so be sure to use it to your advantage to avoid memory leaks!

Realtime::settingsChanged()

In general, this function will be called any time a parameter of the settings other than settings.sceneFilePath is changed (via interacting with the left GUI bar).

For this project, the settings you will have to worry about are:

- settings.nearPlane: Should control your camera's near clipping plane.

- settings.farPlane: Should control your camera's far clipping plane.

- settings.shapeParameter1: Should control the tessellation parameter 1 as described in section 2.2 above.

- settings.shapeParameter2: Should control the tessellation parameter 2 as described in section 2.2 above.
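Since settingsChanged() does not tell you which setting changed, one option is to compare against a saved copy and redo only the affected work. The sketch below assumes the two shape parameters are ints and the two plane distances are floats; SettingsSnapshot, PendingWork, and diffSettings are hypothetical names, not part of the stencil.

```cpp
#include <cassert>

// Hypothetical copy of the four settings listed above (types assumed).
struct SettingsSnapshot {
    int shapeParameter1 = 1;
    int shapeParameter2 = 1;
    float nearPlane = 0.1f;
    float farPlane = 100.0f;
};

struct PendingWork {
    bool rebuildMeshes = false;      // re-tessellate and re-upload vertex data
    bool rebuildProjection = false;  // recompute the projection matrix only
};

// Compares the previous and current settings and reports what to redo.
PendingWork diffSettings(const SettingsSnapshot& previous,
                         const SettingsSnapshot& current) {
    // Assumed invariant: a perspective projection needs a positive near plane.
    assert(current.nearPlane > 0.0f);
    PendingWork work;
    work.rebuildMeshes = previous.shapeParameter1 != current.shapeParameter1 ||
                         previous.shapeParameter2 != current.shapeParameter2;
    work.rebuildProjection = previous.nearPlane != current.nearPlane ||
                             previous.farPlane != current.farPlane;
    return work;
}
```

This avoids re-tessellating every shape when only a clipping plane slider moved.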

Realtime::____event() Functions

These functions are all for handling interactivity which you will implement in Project 6: Action!.

So do not worry about these for now!

Scenes Viewer

To assist with creating and modifying scene files, we have made a web viewer called Scenes. From this site, you are able to upload scenefiles or start from a template, modify properties, then download the scene JSON to render with your renderer.

We hope that this is a fun and helpful tool as you implement the rest of the projects in the course which all use this scenefile format!

For more information, here is our published documentation for the JSON scenefile format and a tutorial for using Scenes.

TA Demos

Demos of the TA solution are available in this Google Drive folder titled projects_lightscamera_min.

macOS Warning: "____ cannot be opened because the developer cannot be verified."

If you see this warning, don't eject the disk image. You can allow the application to be opened from your system settings:

Settings > Privacy & Security > Security > "____ was blocked from use because it is not from an identified developer." > Click "Allow Anyway"

Submission

Your repo should include a submission template file in Markdown format with the filename submission-lights-camera.md. We provide the exact scenefiles you should use to generate the outputs. You should also list some basic information about your design choices, the names of students you collaborated with, any known bugs, and the extra credit you've implemented.

For extra credit, please describe what you've done and point out the related part of your code. We have provided 4 different booleans in Settings for you to use for extra credit: extraCredit1, extraCredit2, extraCredit3, and extraCredit4. These are activated by their respective GUI checkboxes. If you implement any extra features that are activated by a GUI "Extra Credit #" checkbox, please also document them accordingly so that the TAs won't miss anything when grading your assignment.

Grading

This project is out of 100 points:

- Camera data (10 points)

  - View matrix (2 points)

  - Projection matrix (8 points)

- Shape implementations (30 points)

  - Cube (5 points)

  - Sphere (5 points)

  - Cone (10 points)

  - Cylinder (10 points)

- Shaders (30 points)

  - Phong illumination (20 points)

  - Support for multiple lights (10 points)

- Tessellation (responds to changes in parameters) (10 points)

- Software engineering, efficiency, & stability (20 points)

Remember that filling out the submission-lights-camera.md template is critical for grading. You will be penalized if you do not fill it out.

Extra Credit

Remember that half-credit requirements count as extra credit if you are not enrolled in the half-credit course.

- Adaptive level of detail: Vary the degree of tessellation for your shapes based on:

  - The number of objects in the scene (up to 3 points): Scenes with more objects have fewer triangles, to keep the scene from getting too complex.

  - Distance from the object to the camera (up to 7 points): Objects farther from the camera should have fewer triangles than objects closer to the camera.

  Your shapes should still vary in tessellation based on the slider parameters.

- Create your own scene file (up to 2 points): Create your own scene by writing your own scene file. Refer to the provided scenefiles and to the scene file documentation for examples/information on how these files are structured. Your scene should be interesting enough to be considered as extra credit (in other words, placing two cubes on top of each other is not interesting enough, but building a snowman with a face would be interesting). If you already received extra credit for a custom scene file in the Intersect project, you cannot receive extra credit for the same scene again.

- Mesh rendering:

  - Using pre-written obj loader/parser (up to 4 points): Using a pre-existing obj loader, create trimeshes from any mesh objects and render them in their appropriate scenes.

  - Using self-written obj loader/parser (up to 8 points): To receive a bonus 4 points, write the obj loader you use from scratch!

If you come up with your own extra credit that is not in this list, be sure to check with a TA or the Professor to verify that it is sufficient.

CS 1234/2230 students must attempt at least 10 points of extra credit.
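For the distance-based level of detail option listed above, one possible mapping is to scale the slider value down as an object moves away from the camera. This is only a sketch under stated assumptions: distanceScaledParam, fullDetailDistance, and minParam are hypothetical names, and the inverse-distance falloff is an arbitrary choice rather than the expected behavior.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Scales a slider-driven tessellation parameter by the distance from the
// object to the camera. Objects closer than fullDetailDistance keep the
// full slider value; farther objects fall off inversely with distance,
// but never drop below minParam.
int distanceScaledParam(int sliderParam, float distance,
                        float fullDetailDistance, int minParam) {
    assert(fullDetailDistance > 0.0f && minParam >= 1);
    float d = std::max(distance, fullDetailDistance);
    float scaled = static_cast<float>(sliderParam) * fullDetailDistance / d;
    return std::max(minParam, static_cast<int>(std::round(scaled)));
}
```

Because the slider value is an input, the shapes still respond to the tessellation sliders as required.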

FAQ & Hints

There's nothing showing up for scene ___.json, but I know it works on others:

Some scenes only have point lights in the scene; make sure the scene has directional lights.

My shapes disappear after adding Phong lighting:

There are 2 approaches to debugging this:

- Remove lighting components one at a time, or build them up one at a time, until you see your output appear or disappear.

- Pick out a few parameters calculated throughout your Phong shader and use them as the output color. Is it what you expect? These parameters could include normal vectors, diffuse dot products, light directions, or virtually anything you can think of!

My shapes look like a mess of triangles even though I know they work in the Trimeshes Lab stencil:

- Make sure you are initializing your glm::mat4s as the identity matrix explicitly. Also be sure that all of your uniforms are making it to the shader/being set properly.

Submission

Submit your GitHub repo and commit ID for this project to the "Project 5: Lights, Camera (Code)" assignment on Gradescope.